SEO Requirements for a New Website: What to Include in the Technical Specification

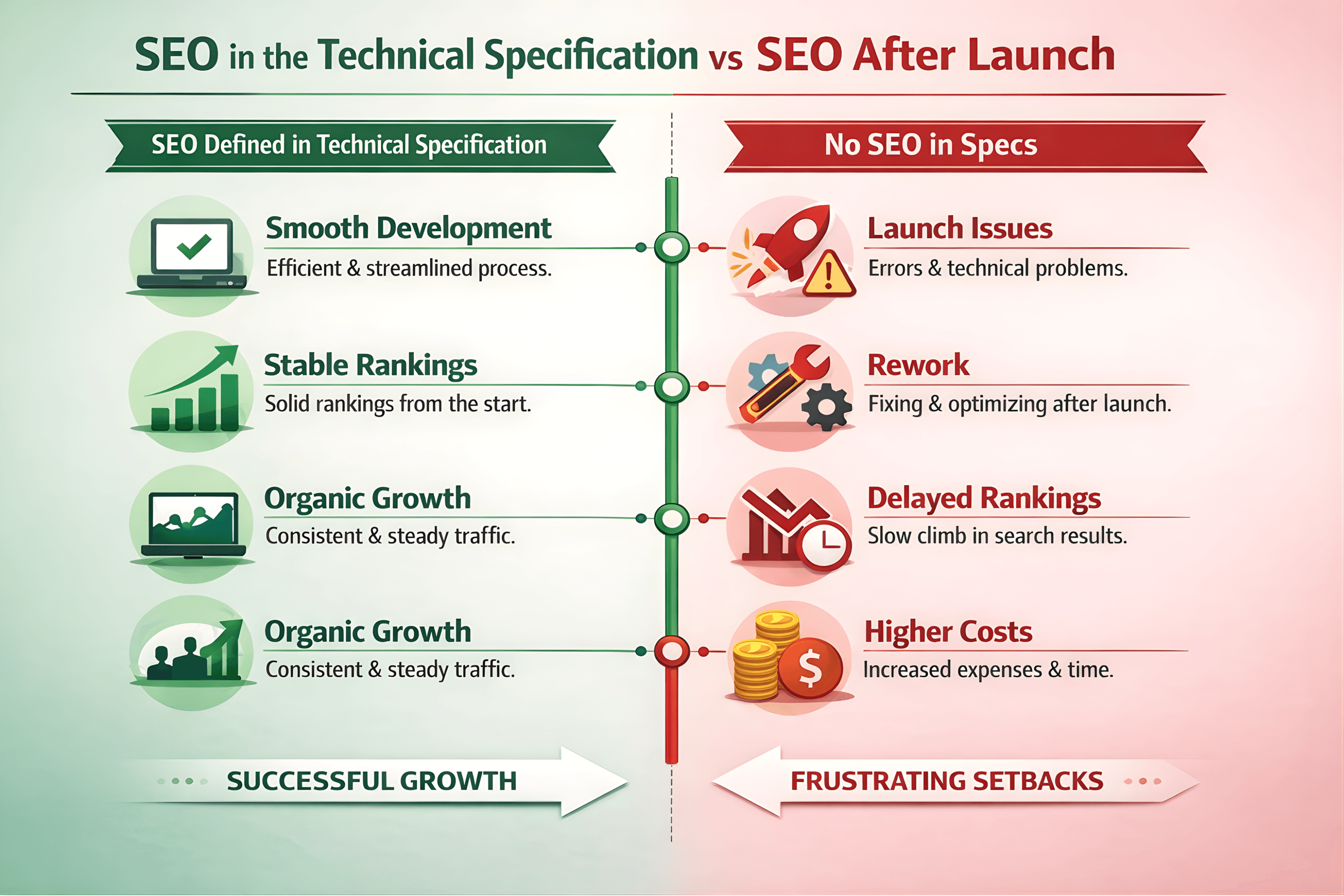

Launching a new website today isn’t just a design-and-build exercise. If people can’t find it, it won’t deliver much value - no matter how polished it looks or how fast it ships. That’s why SEO requirements belong in the technical specification from the very beginning, not tacked on after launch.

Search engines judge new sites largely through technical signals: how the site is structured, how quickly it loads, whether key pages can be crawled and indexed, and whether the content is described clearly through metadata and structured data. When those foundations aren’t planned during development, even great websites can hit a wall - visibility grows slowly, fixes become expensive, and teams end up rebuilding things that could have been done right the first time.

Many early SEO problems on new websites don’t come from “bad content.” They come from preventable technical decisions like messy URL structures, blocked crawling, missing redirects, duplicate pages, slow templates or incomplete schema. Putting SEO into the technical spec gives every team a shared checklist and a clear definition of “done,” so search visibility is treated as a core requirement alongside performance, security and usability.

Why SEO Requirements Must Be Part of the Technical Specification

A lot of businesses still treat SEO as something you “do later,” once the site is live. The problem is that SEO isn’t a plugin you add at the end - it’s baked into how a website is built. If the site’s structure, templates and rendering approach aren’t search-friendly from the start, you can launch a perfectly functional website that simply doesn’t show up where it needs to.

That’s because the choices made during development - URL structure, server-side vs. client-side rendering, load speed, internal linking, and whether content is accessible to crawlers - directly influence how search engines crawl, index and rank your pages. When SEO is ignored at this stage, teams often discover after launch that key pages can’t be indexed, important content isn’t being rendered properly, or the site is too slow to compete.

And fixing these issues later usually costs more than addressing them upfront. Teams often end up reworking templates, rebuilding navigation, adjusting URL patterns, cleaning up duplicates caused by parameters or pagination and chasing performance problems that surface in Core Web Vitals reports.

When in-house SEO experience is limited, partnering with a trusted SEO team ensures the technical specification includes performance, indexation and structured data requirements from the start.

From a practical standpoint, SEO requirements in the technical spec should clearly define:

Which pages must be indexable (and when they should not be)

How metadata and headings are generated and managed

What performance benchmarks must be met before release

How content is rendered so it can be crawled reliably

Which structured data types are required (and where they must appear)

Companies often rely on experienced agencies like LuxSite to ensure these specifications are comprehensive and aligned with current SEO best practices.

SEO Goals and KPIs to Define in the Technical Specification

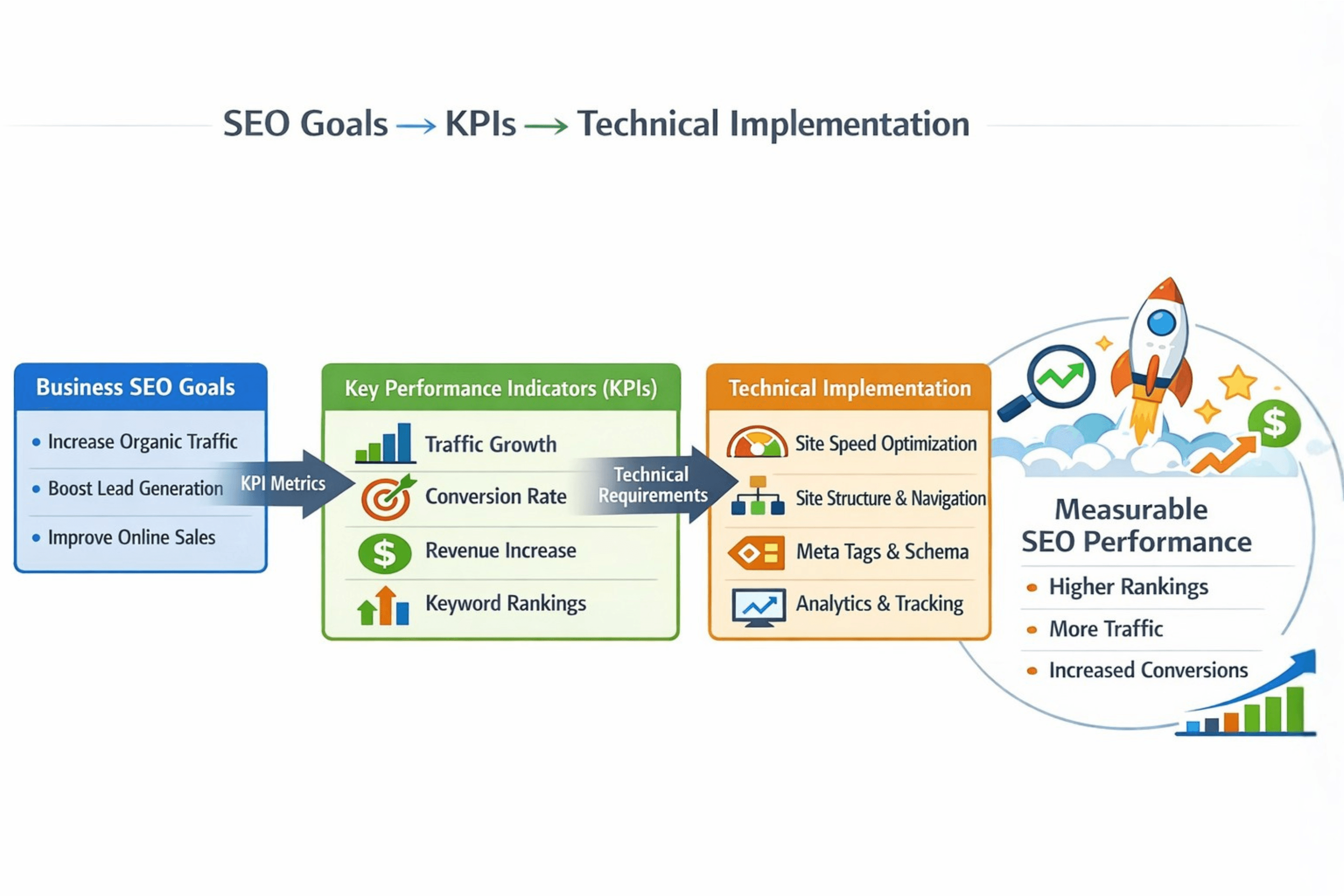

Locking in SEO goals and KPIs while you’re writing the technical specification keeps everyone building toward the same finish line. Instead of vague expectations like “we want traffic,” you get clear targets that influence architecture, performance work, and template decisions - and you also get a clean way to judge whether the launch is actually successful.

Aligning Business Objectives with SEO Goals

SEO shouldn’t exist in a vacuum. A SaaS startup trying to generate demos will need a different approach than an enterprise site rolling out new country or language versions. The technical spec should spell out the business context so the implementation isn’t generic or misaligned.

Common business-driven SEO goals include:

Increasing qualified organic traffic

Capturing non-branded search demand

Supporting lead generation or product conversions

Expanding into new geographic or language markets

Building topical authority in a competitive niche

Once these are documented early, they naturally steer technical choices like site structure, page templates, internal linking rules and how easily content can scale.

Defining Measurable SEO KPIs

KPIs turn goals into something you can track and defend. They should be realistic, measurable, and tied to the tools you plan to configure as part of the technical setup (so there’s no “we’ll figure reporting out later”).

Here’s a practical set to include:

| SEO Goal | KPI | Measurement Tool | Why It Matters |
|---|---|---|---|
| Increase organic visibility | Keyword ranking distribution | Ahrefs / SEMrush | Measures search competitiveness |
| Improve discoverability | Indexed pages ratio | Google Search Console | Detects crawl/index issues |
| Enhance site performance | Core Web Vitals scores | PageSpeed Insights | Direct ranking and UX signal |
| Drive organic traffic | Organic sessions growth | Google Analytics 4 | Indicates SEO traction |
| Improve engagement | Bounce rate / engagement time | Google Analytics 4 | Reflects content & UX quality |
| Support conversions | Organic conversion rate | Google Analytics 4 | Links SEO to business results |

Including these criteria in the technical specification prevents subjective decision-making and ensures SEO quality is verifiable.

Website Architecture & URL Structure Requirements

Website architecture and URL structure are the “bones” of technical SEO. They shape how easily search engines crawl your pages, how authority flows through internal links, and how intuitive the site feels for real users. And once these choices are baked into development, changing them later usually means redirects, rework, and lost time - so they belong in the technical specification from the start.

Logical and Scalable Site Architecture

Search engines (and users) respond best to a clear hierarchy that mirrors how your content is organized. Ideally, important pages should be reachable in a few clicks - often three or fewer from the homepage.

Architecture requirements to define in the spec:

Flat hierarchy where possible to reduce crawl depth and speed up indexation

Category-based structure that groups related content cleanly

Navigation that matches the hierarchy (menus reflect real page structure, not just design choices)

Scalability so you can add new sections later without restructuring everything

On content-heavy sites - blogs, marketplaces, SaaS resource hubs - structure also affects how link equity is distributed. Pages buried deep tend to get crawled less often and take longer to rank.
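To make the three-click guideline testable, a crawl-depth check can run against the internal link graph before launch. The sketch below assumes the link graph is already available as a dictionary mapping each page path to the paths it links to (for example, exported from a crawler or built from CMS data); the function names are hypothetical. A breadth-first search gives each page's minimum click depth, and any known page the search never reaches is an orphan.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from `home` to every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # In breadth-first order, the first visit to a page is via its shortest path.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_depth(links: dict[str, list[str]], all_pages: list[str],
                max_depth: int = 3, home: str = "/") -> tuple[list[str], list[str]]:
    """Return (pages deeper than max_depth, orphan pages with no internal path)."""
    depths = click_depths(links, home)
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    orphans = sorted(set(all_pages) - set(depths))
    return too_deep, orphans


if __name__ == "__main__":
    site_links = {
        "/": ["/blog/", "/products/"],
        "/blog/": ["/blog/page-2/"],
        "/blog/page-2/": ["/blog/page-3/"],
        "/blog/page-3/": ["/blog/old-post/"],
        "/products/": ["/products/widget/"],
    }
    pages = list(site_links) + ["/products/widget/", "/blog/old-post/", "/landing/promo/"]
    print(audit_depth(site_links, pages))  # (['/blog/old-post/'], ['/landing/promo/'])
```

In this example the old post sits four clicks deep behind paginated archive pages, and the promo landing page has no internal links at all; both would fail a spec that requires a depth of three or less and no orphan pages.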

Internal Linking Requirements

Internal linking isn’t just a UX detail - it’s one of the strongest signals you control. Your technical spec should spell out how internal links work so they’re consistent across templates and content types.

Include requirements for:

How links are created (manual, automated modules, related-content blocks, etc.)

Rules for contextual links inside body content

Breadcrumbs (format, placement and behavior)

Pagination logic (prev/next, and view-all only if it’s truly needed and performant)

Done well, internal linking improves discoverability, strengthens topical relevance, and helps search engines understand how pages relate.

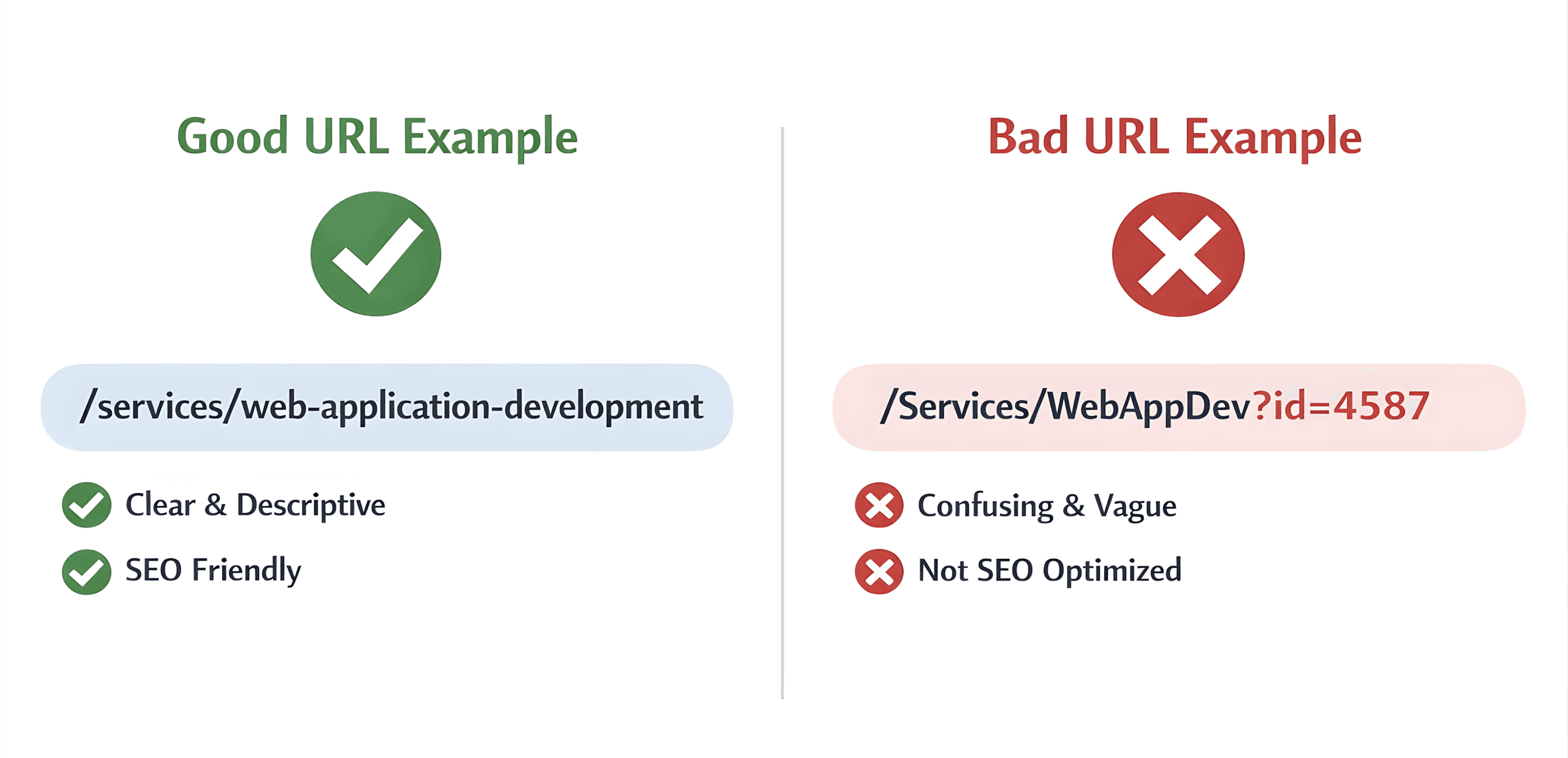

URL Structure Rules

URLs act as both ranking signals and usability indicators. Clean, descriptive URLs improve click-through rates and make content easier to manage. Mandatory URL requirements to document include: lowercase characters only, hyphen-separated words (no underscores), descriptive slugs reflecting page content, no session IDs or tracking parameters in indexable URLs and consistent trailing slash policy (with enforced redirects).

Additionally, the technical specification should define URL behavior for pagination, filters and faceted navigation, search result pages and archived or deprecated content.

Incorrect handling of these elements is a leading cause of duplicate content and crawl inefficiency.
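The slug rules above can be enforced in code rather than left to individual editors. This is a minimal sketch using only the Python standard library; `slugify` and `build_path` are hypothetical helper names, and the "trailing slash on" policy is an assumption (the point is to pick one policy and apply it everywhere). The ASCII-folding step suits Latin-script titles only; sites in other scripts would need a proper transliteration approach.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated slug."""
    # Split accented letters into base letter + combining accent, then drop the non-ASCII marks.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Collapse every run of characters outside a-z/0-9 (spaces, underscores, punctuation) into one hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text.lower())
    return text.strip("-")


def build_path(section: str, title: str, trailing_slash: bool = True) -> str:
    """Build an indexable path such as /blog/my-post/ under one trailing-slash policy."""
    path = f"/{slugify(section)}/{slugify(title)}"
    return path + "/" if trailing_slash else path


print(build_path("Blog", "Café Menu_2024: Summer Specials!"))  # /blog/cafe-menu-2024-summer-specials/
```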

Canonicalization and Duplicate Content Prevention

Modern websites often generate multiple URLs for the same content. Without strict canonical rules, search engines may split ranking signals across duplicates.

The technical specification must clearly define canonical tag usage rules, preferred protocol (HTTPS), preferred domain (www vs non-www) and parameter handling strategy.

All non-canonical URLs should resolve via 301 redirects or canonical tags to the primary version.
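One way to keep these canonical rules consistent is a single normalization function that both the redirect layer and the canonical-tag template call, so the two can never disagree. The sketch below assumes a hypothetical site on `www.example.com`, lowercase paths (which only works if the CMS never publishes mixed-case URLs), a trailing slash on directory-style paths and a hypothetical list of tracking parameters; a real spec would list its own.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

PREFERRED_HOST = "www.example.com"                 # hypothetical preferred domain
HOST_ALIASES = {"example.com", "www.example.com"}  # hosts that should resolve to PREFERRED_HOST
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                   "utm_content", "gclid", "fbclid", "sessionid"}  # hypothetical list


def canonical_url(url: str) -> str:
    """Return the single preferred form of `url`: HTTPS, preferred host, clean query."""
    parts = urlsplit(url)
    host = parts.hostname or ""          # .hostname is lowercased and excludes any port
    if host in HOST_ALIASES:
        host = PREFERRED_HOST
    path = parts.path.lower() or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"                      # trailing-slash policy for directory-style paths
    # Drop tracking/session parameters and sort the rest so parameter order cannot create duplicates.
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if k.lower() not in TRACKING_PARAMS)
    return urlunsplit(("https", host, path, urlencode(query), ""))  # fragment dropped


def redirect_target(url: str) -> Optional[str]:
    """Return the 301 target for a non-canonical URL, or None if `url` is already canonical."""
    target = canonical_url(url)
    return None if target == url else target


print(redirect_target("http://example.com/Blog/Post?utm_source=news&page=2"))
# https://www.example.com/blog/post/?page=2
```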

Architecture & URL SEO Requirements

Website architecture and URL structure define how search engines and users navigate your website. Clear hierarchy, clean URLs and consistent technical rules help search engines crawl and index pages efficiently, while also improving usability and click-through rates.

| Element | Requirement | SEO Purpose |
|---|---|---|
| Crawl depth | ≤ 3 levels | Faster indexation |
| Navigation structure | Reflects hierarchy | Improved crawlability |
| URL format | Clean & descriptive | Higher CTR, clarity |
| Canonical tags | Mandatory | Duplicate control |
| Breadcrumbs | Structured + schema | Better SERP appearance |

A well-defined architecture and URL strategy helps prevent inefficient crawling, duplicate content issues and extra restructuring costs.

Indexation & Crawlability Requirements

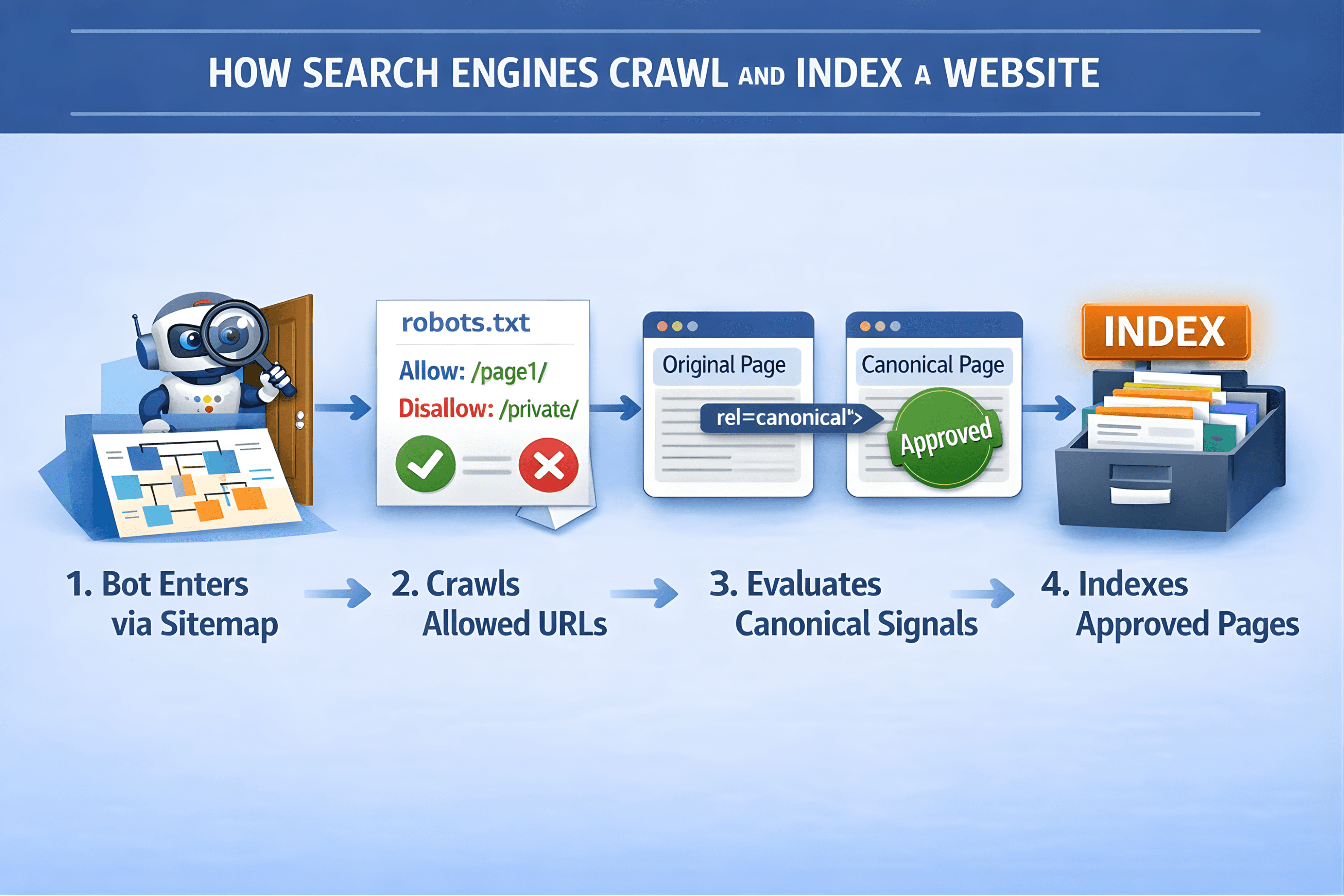

Indexation and crawlability decide whether your pages can show up in search results at all. You can have great content and a beautiful design, but if search engines can’t crawl, render, or index the site properly, organic traffic won’t follow. That’s why indexation rules should be treated as core technical requirements - and written into the technical specification, not left to “SEO later.”

Robots.txt Requirements

The robots.txt file controls how search engine bots interact with the website. While it is often treated as a simple configuration file, incorrect directives can unintentionally block entire sections of a site from being indexed.

The technical specification should define:

Which directories must remain fully crawlable

Which technical or private sections must be blocked

Rules for staging and development environments

Search engine-specific directives, if applicable
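A robots.txt file is small enough to generate from the environment name, which removes the classic launch mistake of shipping the staging `Disallow: /` to production. This sketch assumes hypothetical private paths (`/admin/`, `/cart/`, `/search`) and checks the result with Python's built-in `urllib.robotparser`, which does simple prefix matching and does not model every extension individual search engines support (such as wildcards).

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(environment: str, sitemap_url: str) -> str:
    """Generate robots.txt from the deployment environment (hypothetical rules)."""
    if environment != "production":
        # Block all crawling outside production. robots.txt is not access control,
        # so staging should also sit behind authentication.
        return "User-agent: *\nDisallow: /\n"
    return (
        "User-agent: *\n"
        "Disallow: /admin/\n"
        "Disallow: /cart/\n"
        "Disallow: /search\n"   # prefix match: also blocks /search-tips/, /searchlight/, ...
        "\n"
        f"Sitemap: {sitemap_url}\n"
    )


def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Check a URL against robots.txt rules using the standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    rules = build_robots_txt("production", "https://www.example.com/sitemap.xml")
    for url in ("https://www.example.com/blog/post/",
                "https://www.example.com/admin/login",
                "https://www.example.com/search-tips/"):
        print(url, can_crawl(rules, url))
```

The last URL is reported as blocked even though it is probably an ordinary content page, which is exactly the kind of accidental blocking a pre-release test like this should catch. Keep in mind that robots.txt controls crawling, not indexing: a URL blocked here cannot show its meta robots tag to crawlers.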

Meta Robots Directives

In addition to robots.txt, individual pages may require granular control using meta robots tags.

Common use cases include:

noindex for internal search results or filtered pages

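nofollow for pages whose outgoing links should not be followed (for individual user-generated or untrusted links, a rel="ugc" or rel="nofollow" attribute on the link itself is the more precise tool)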

noimageindex where image visibility is restricted

The technical specification must clearly define which page types are indexable and which are not, preventing accidental deindexation of valuable content.
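Indexability per page type works best as a single lookup table that every template reads, so a new template cannot silently inherit the wrong directive. The page types and directives below are hypothetical examples; the function deliberately fails for any page type without an explicit decision, and forces `noindex` everywhere outside production.

```python
# Hypothetical indexability policy per page type; every template must map to an entry.
ROBOTS_POLICY = {
    "home": "index, follow",
    "category": "index, follow",
    "product": "index, follow",
    "article": "index, follow",
    "internal_search": "noindex, follow",   # keep links crawlable, keep results out of the index
    "filtered_listing": "noindex, follow",
    "account": "noindex, nofollow",
}


def meta_robots_tag(page_type: str, environment: str = "production") -> str:
    """Return the meta robots tag for a page type; unknown types fail loudly."""
    if environment != "production":
        content = "noindex, nofollow"   # never let staging/dev pages into the index
    elif page_type in ROBOTS_POLICY:
        content = ROBOTS_POLICY[page_type]
    else:
        raise ValueError(f"no robots policy defined for page type {page_type!r}")
    return f'<meta name="robots" content="{content}">'


print(meta_robots_tag("internal_search"))  # <meta name="robots" content="noindex, follow">
```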

XML Sitemap Requirements

XML sitemaps act as a direct communication channel between the website and search engines. They help search engines discover new or updated content faster and understand which URLs are intended for indexation.

Mandatory sitemap requirements:

Only canonical, indexable URLs included

Automatic updates on content changes

Separate sitemaps for large sites (e.g., blog, pages, media)

Submission to Google Search Console

Well-maintained sitemaps help search engines discover URLs efficiently; Google’s documentation notes they are especially useful for large sites and for new sites with few external links pointing to them.
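The sitemap rules above can be sketched as a generator that filters pages before writing any XML. This assumes a hypothetical `Page` record exposing the URL, last-modified date, an indexability flag and the page's canonical URL. The 50,000-URL-per-file limit comes from the sitemaps.org protocol (which also caps each file at 50 MB uncompressed, not checked here); larger sites then list the individual files in a sitemap index.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file limit in the sitemaps.org protocol


@dataclass
class Page:  # hypothetical CMS record
    url: str
    lastmod: date
    indexable: bool
    canonical_url: str


def sitemap_eligible(pages: list[Page]) -> list[Page]:
    """Only indexable pages that are their own canonical belong in a sitemap."""
    return [p for p in pages if p.indexable and p.canonical_url == p.url]


def build_sitemaps(pages: list[Page], max_urls: int = MAX_URLS_PER_FILE) -> list[str]:
    """Return one <urlset> XML string per chunk of at most max_urls URLs."""
    eligible = sitemap_eligible(pages)
    files = []
    for start in range(0, len(eligible), max_urls):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for page in eligible[start:start + max_urls]:
            entry = ET.SubElement(urlset, "url")
            ET.SubElement(entry, "loc").text = page.url
            ET.SubElement(entry, "lastmod").text = page.lastmod.isoformat()
        files.append(ET.tostring(urlset, encoding="unicode"))
    return files


def build_sitemap_index(sitemap_locations: list[str]) -> str:
    """Return a <sitemapindex> listing each sitemap file's absolute URL."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locations:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = loc
    return ET.tostring(index, encoding="unicode")
```

Regenerating these files from the CMS on every publish or update event is one way to meet the "automatic updates" requirement.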

Pagination, Filters and Faceted Navigation

Dynamic websites often generate multiple URLs for similar content through pagination, filtering, or sorting. If not controlled, these URLs can flood search engines with low-value pages.

The technical specification should define canonical rules for paginated content, whether paginated pages are indexable, parameter handling strategy and noindex rules for filter combinations.

Failure to manage these elements is one of the most common causes of duplicate content and crawl budget waste.
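Parameter and pagination rules are easiest to review when one function returns the robots directive and canonical URL for any listing URL. The policy below is a hypothetical example, not a universal rule: each paginated page keeps its own canonical (rather than pointing at page 1), a single allowlisted facet such as `color` stays indexable, and any other parameter or facet combination becomes `noindex, follow` with no cross-URL canonical, so the two signals never contradict each other.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

INDEXABLE_FACETS = {"color", "brand"}  # hypothetical allowlist of facets with real search demand


def listing_directives(url: str) -> dict[str, Optional[str]]:
    """Return the robots directive and canonical URL for a category/listing URL."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    page = params.pop("page", None)
    facets = {k: v for k, v in params.items() if k in INDEXABLE_FACETS}
    others = {k: v for k, v in params.items() if k not in INDEXABLE_FACETS}

    if others or len(facets) > 1:
        # Sort orders, price ranges, unknown params and facet combinations: crawlable, not indexable.
        return {"robots": "noindex, follow", "canonical": None}

    # Zero or one allowlisted facet: indexable and self-canonical.
    keep = dict(facets)
    if page and page != "1":
        keep["page"] = page   # paginated pages keep their own canonical; page=1 maps to the base URL
    query = urlencode(sorted(keep.items()))
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))
    return {"robots": "index, follow", "canonical": canonical}
```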

Indexation & Crawlability Requirements Checklist

The checklist below summarizes the indexation and crawlability requirements covered above.

| SEO Element | Requirement | SEO Purpose |
|---|---|---|
| robots.txt | Clear crawl rules | Prevent accidental blocking |
| Meta robots tags | Defined by page type | Control indexation |
| XML sitemap | Canonical URLs only | Faster discovery |
| Canonical tags | Mandatory | Duplicate prevention |
| Pagination handling | SEO-safe logic | Crawl efficiency |

Indexation is not a one-time task. The technical specification should require ongoing monitoring through Google Search Console’s Page indexing report (formerly the Coverage report), crawl error tracking and indexation checks after every release.

Technical SEO Performance Requirements (Core Web Vitals)

Performance isn’t something to “tune later.” Google’s page experience signals (which include Core Web Vitals) have been part of Search ranking systems since the Page Experience rollout in 2021, and slow pages also tend to leak users before they ever convert. If performance matters to visibility and revenue, it belongs in the technical specification - so it’s engineered into templates, hosting and frontend delivery from day one.

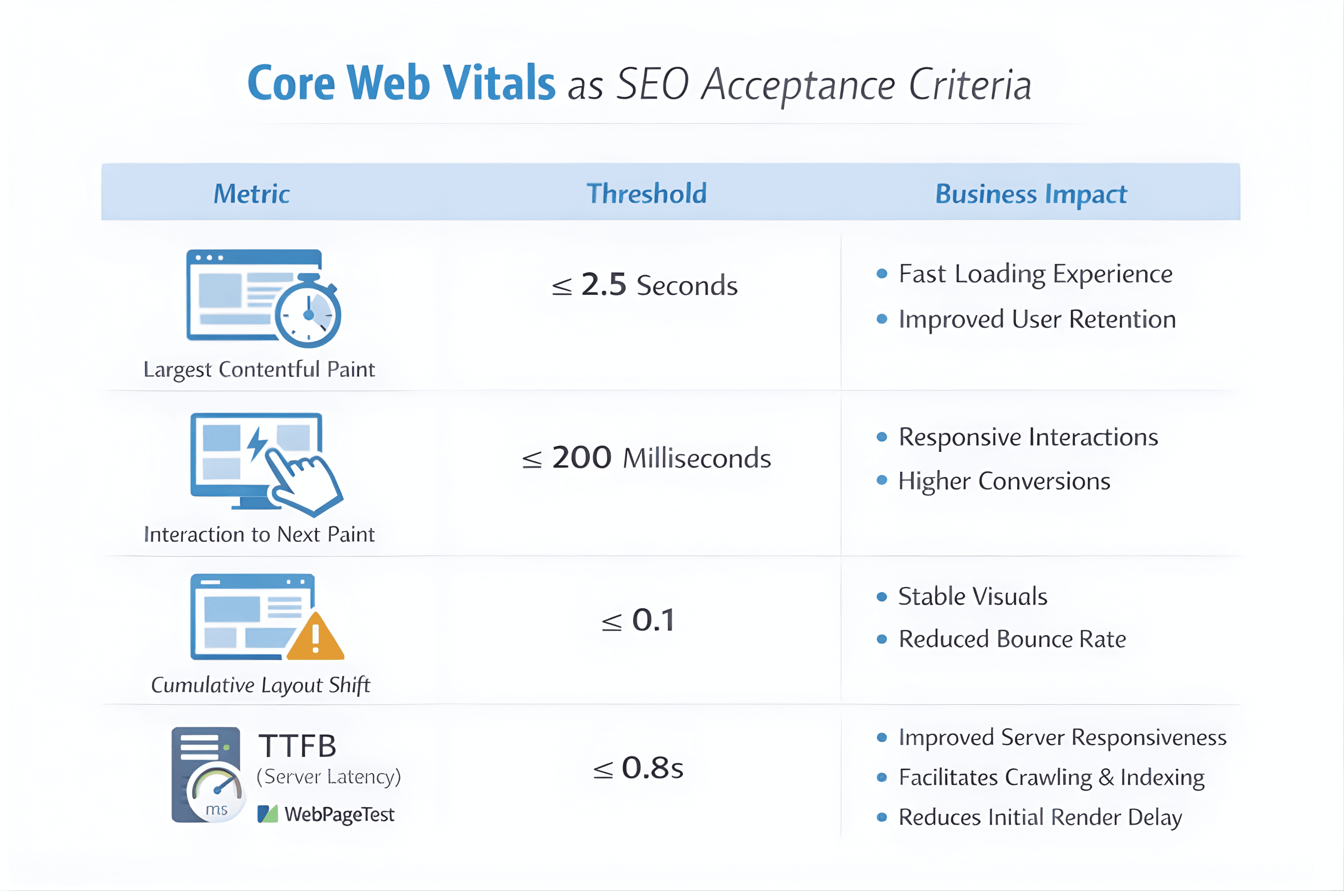

Mandatory Core Web Vitals Benchmarks

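The following thresholds align with Google’s public guidance as of 2024 (each metric is assessed at the 75th percentile of page loads):

| Metric | Required threshold ("good") |
|---|---|
| Largest Contentful Paint (LCP) | ≤ 2.5 s |
| Interaction to Next Paint (INP) | ≤ 200 ms |
| Cumulative Layout Shift (CLS) | ≤ 0.1 |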

The spec must require these thresholds to be met on mobile, in real-user (field) data, across the majority of pages and in the production environment. This prevents scenarios where only the homepage “passes.”
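The thresholds are easiest to enforce as an automated check on field data. This sketch assumes per-page samples of real-user measurements have already been collected (for example from RUM beacons or CrUX exports; the data shape here is hypothetical) and judges each metric at the 75th percentile, as Google does. TTFB is included with the 800 ms target from this spec, although it is a diagnostic metric rather than a Core Web Vital.

```python
import math

# "Good" thresholds: LCP, INP and TTFB in milliseconds; CLS is unitless.
THRESHOLDS = {"LCP": 2500, "INP": 200, "CLS": 0.1, "TTFB": 800}


def p75(values: list[float]) -> float:
    """Nearest-rank 75th percentile of a non-empty list of samples."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))   # 1-based rank
    return ordered[rank - 1]


def page_passes(samples: dict[str, list[float]]) -> dict[str, bool]:
    """Per-metric pass/fail for one page, judged at the 75th percentile."""
    return {metric: p75(values) <= THRESHOLDS[metric] for metric, values in samples.items()}


def pass_rate(pages: dict[str, dict[str, list[float]]]) -> float:
    """Share of pages on which every measured metric passes."""
    passing = sum(all(page_passes(samples).values()) for samples in pages.values())
    return passing / len(pages)
```

A scheduled job could then flag a release when `pass_rate` falls below whatever share of pages the spec defines as "the majority".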

Server & Network Requirements

Performance starts at the network layer. Technical specs should define:

Hosting (SSD + HTTP/3 support)

CDN (Mandatory edge caching)

TLS (TLS 1.2+)

DNS (Low-latency provider)

TTFB target (< 800 ms globally)

Websites that fail on TTFB often fail Core Web Vitals even when the frontend is well optimized. To keep the frontend from bloating after launch, the spec should still require: critical CSS inlined, non-critical JS deferred, unused CSS purged, below-the-fold images lazy-loaded, fonts preloaded and no layout shifts caused by media.

Media Optimization Requirements

Media files often make up the largest part of a website’s payload, so it’s crucial to define formats and processing standards in the technical specification. Images should use modern formats like WebP or AVIF, with JPEG or PNG as fallback options. Videos should be in MP4/H.264 format, loaded lazily and without autoplay on mobile devices. Icons are best implemented as SVG, preferably inline for faster rendering. Thumbnails and other derived media should have automated sizing controlled through the CMS to ensure consistency and performance.
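Automated derived sizes and modern formats can be expressed as one markup helper that every template uses. The sketch below assumes a hypothetical CMS that stores each image as `<base>-<width>.<ext>` in AVIF, WebP and JPEG at a fixed set of widths. It always writes explicit `width` and `height` attributes so the browser can reserve space (avoiding layout shifts), and lets templates switch off lazy loading for above-the-fold images such as the LCP hero.

```python
from html import escape

WIDTHS = (320, 640, 960, 1280)  # hypothetical derived sizes produced by the CMS


def picture_markup(base: str, alt: str, width: int, height: int,
                   sizes: str = "100vw", lazy: bool = True) -> str:
    """Return <picture> markup with AVIF/WebP sources and a JPEG fallback."""
    def srcset(ext: str) -> str:
        return ", ".join(f"{base}-{w}.{ext} {w}w" for w in WIDTHS)

    # Pass lazy=False for the above-the-fold (LCP) image.
    loading = ' loading="lazy"' if lazy else ""
    return (
        "<picture>"
        f'<source type="image/avif" srcset="{srcset("avif")}" sizes="{sizes}">'
        f'<source type="image/webp" srcset="{srcset("webp")}" sizes="{sizes}">'
        f'<img src="{base}-{WIDTHS[-1]}.jpg" srcset="{srcset("jpg")}" sizes="{sizes}" '
        f'width="{width}" height="{height}" alt="{escape(alt)}"{loading}>'
        "</picture>"
    )
```

Browsers pick the first `<source>` whose `type` they support, so older browsers fall back to the JPEG `<img>`.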

In short, Google’s 2024 documentation confirms that Core Web Vitals are used by its ranking systems, while a Deloitte study shows that reducing load time by just 0.1 seconds can increase conversions for retail brands by up to 8%. Defining measurable, testable performance criteria at the specification stage reduces future optimization costs and keeps the website from losing organic potential before it even launches.

On-Page SEO Requirements in the Technical Specification

On-page SEO is about making each page easy for search engines to understand and easy for users to engage with. When rules for metadata, headings and internal links are built into templates from the start, teams avoid messy inconsistencies after launch and the site is more likely to index cleanly and perform well in search.

Meta Tags Rules

Meta tags are the first elements search engines and users interact with in SERPs. The technical specification should define strict rules to maintain consistency and SEO effectiveness:

| Element | Requirement | Purpose |
|---|---|---|
| Title Tag | 50–60 characters, unique per page, include primary keyword | Improves CTR and relevance signals |
| Meta Description | 120–160 characters, compelling summary, unique | Influences click-through rates |
| Dynamic Meta Generation | Use templating rules for category, product, blog pages | Avoid duplicate titles/descriptions across large sites |

Best Practices to Include in TS:

Ensure that titles and meta descriptions are auto-generated for scalable pages (e.g., e-commerce categories) while allowing manual overrides for high-priority content.

Define rules for keyword placement (beginning of title preferred, natural language).

Avoid keyword stuffing; ensure readability for humans.
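Template-driven titles with manual overrides, plus an automated length and uniqueness check, could look like the sketch below. The templates, field names and page dictionaries are hypothetical; the character ranges come from the table above and are only an approximation of what fits in a search snippet.

```python
from typing import Optional

# Hypothetical title templates per page type.
TITLE_TEMPLATES = {
    "category": "{name} | {brand}",
    "product": "{name} - {category} | {brand}",
    "article": "{name} | {brand} Blog",
}
TITLE_RANGE = (50, 60)          # characters
DESCRIPTION_RANGE = (120, 160)  # characters


def build_title(page_type: str, fields: dict[str, str], override: Optional[str] = None) -> str:
    """Use a manual override for priority pages, otherwise fill the page-type template."""
    if override:
        return override
    return TITLE_TEMPLATES[page_type].format(**fields)


def validate_meta(pages: list[dict[str, str]]) -> list[tuple[str, str]]:
    """Return (url, problem) pairs for out-of-range lengths and duplicate values."""
    problems = []
    first_seen: dict[tuple[str, str], str] = {}   # (field, value) -> first URL using it
    for page in pages:
        url = page["url"]
        for field, (low, high) in (("title", TITLE_RANGE), ("description", DESCRIPTION_RANGE)):
            value = page[field]
            if not low <= len(value) <= high:
                problems.append((url, f"{field} length {len(value)} outside {low}-{high}"))
            key = (field, value)
            if key in first_seen:
                problems.append((url, f"duplicate {field} of {first_seen[key]}"))
            else:
                first_seen[key] = url
    return problems
```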

Data Insight: Moz study (2024) shows pages with optimized, unique meta descriptions receive on average 5–10% higher CTR compared to generic or duplicated meta tags.

Heading Structure (H1–H6)

Heading tags organize content for search engines and users, signaling hierarchy and topical relevance. The TS must specify:

H1: Only one per page; reflects primary keyword/topic.

H2–H6: Used to structure subtopics, maintain semantic order.

Consistency Rules: Automatically generated headings for templates must not conflict with SEO strategy.

Implementation Guidelines:

Define a clear hierarchy: H1 → H2 → H3 → H4/5/6.

Avoid skipping levels unless justified by design.

Use heading tags for content hierarchy, not purely for styling.

Recommended Schema for Headings:

Page
├─ H1: Main Topic
├─ H2: Subsection
│  ├─ H3: Detail
│  └─ H3: Detail
└─ H2: Subsection
   └─ H3: Detail
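Heading rules can be checked automatically on rendered HTML, for example in CI against each template. This minimal sketch uses the standard-library `html.parser` and checks the two rules that are easy to verify mechanically (exactly one H1, no skipped levels on the way down); judgments about keywords and topics stay with people. The function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names, so <H2> arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Return rule violations: an H1 count other than one, or levels skipped going down."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    levels = collector.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one H1, found {h1_count}")
    for previous, current in zip(levels, levels[1:]):
        # Going deeper by more than one level (H2 -> H4) skips a level; going back up is fine.
        if current > previous + 1:
            issues.append(f"skipped level: H{previous} followed by H{current}")
    return issues
```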

Internal Linking & Anchor Text Rules

Internal linking signals relationships between pages and helps distribute link equity. The TS should specify:

Anchor Text Guidelines: Descriptive, contextually relevant, avoid exact-match keyword stuffing.

Mandatory Linking Rules:

Link from category to product pages.

Use breadcrumbs for navigational hierarchy.

Ensure no orphan pages exist.

Internal Linking Implementation

| Requirement | Description | Purpose |
|---|---|---|
| Contextual Links | 2–5 links per content block | Helps search engines understand relevance |
| Breadcrumbs | Structured + Schema | Improves UX & SERP appearance |
| Link Depth | ≤ 3 clicks from homepage | Supports crawl efficiency |
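The anchor-text and link-count rules can be audited per content block in a similar way. This sketch treats site-relative `href` values (starting with a single `/`) as internal links, uses a hypothetical list of generic anchors and applies the 2 to 5 range from the table; all three are assumptions a real spec would pin down.

```python
from html.parser import HTMLParser

GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "more", "this page"}  # hypothetical


class LinkCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href=...> in a block."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace so "read\n  more" matches "read more".
            self.links.append((self._href, " ".join("".join(self._text).split())))
            self._href = None


def audit_block(html: str, min_links: int = 2, max_links: int = 5) -> list[str]:
    """Flag internal-link counts outside the range and empty or generic anchors."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    internal = [(href, text) for href, text in collector.links
                if href.startswith("/") and not href.startswith("//")]
    issues = []
    if not min_links <= len(internal) <= max_links:
        issues.append(f"{len(internal)} internal links (expected {min_links}-{max_links})")
    for href, text in internal:
        if not text:
            issues.append(f"empty anchor -> {href}")
        elif text.lower() in GENERIC_ANCHORS:
            issues.append(f"generic anchor '{text}' -> {href}")
    return issues
```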

By defining on-page SEO requirements in the technical specification, teams gain a unified roadmap for content structure, metadata and rendering strategies. This reduces post-launch errors, ensures better indexing and directly supports search visibility and conversion optimization.

Structured Data & Schema Markup Requirements

Structured data (schema markup) gives search engines clearer context about what’s on a page. When it’s implemented correctly, it can unlock richer search appearances - like breadcrumb trails, product details, FAQs or article previews - and reduce ambiguity about things like authorship, dates and entities. Defining schema requirements in the technical specification helps teams implement it consistently across templates instead of adding it unevenly (or forgetting it) after launch.

Mandatory Schema Types

| Schema Type | Applicable Pages | Purpose |
|---|---|---|
| Organization | All pages | Identifies website as a legitimate entity; improves brand trust |
| BreadcrumbList | Category and product pages | Enhances SERP appearance with navigational breadcrumbs |
| Article / BlogPosting | Blog, news or article pages | Signals content type, publication date, author and featured images |
| Product | E-commerce product pages | Enables rich snippets like price, availability and reviews |

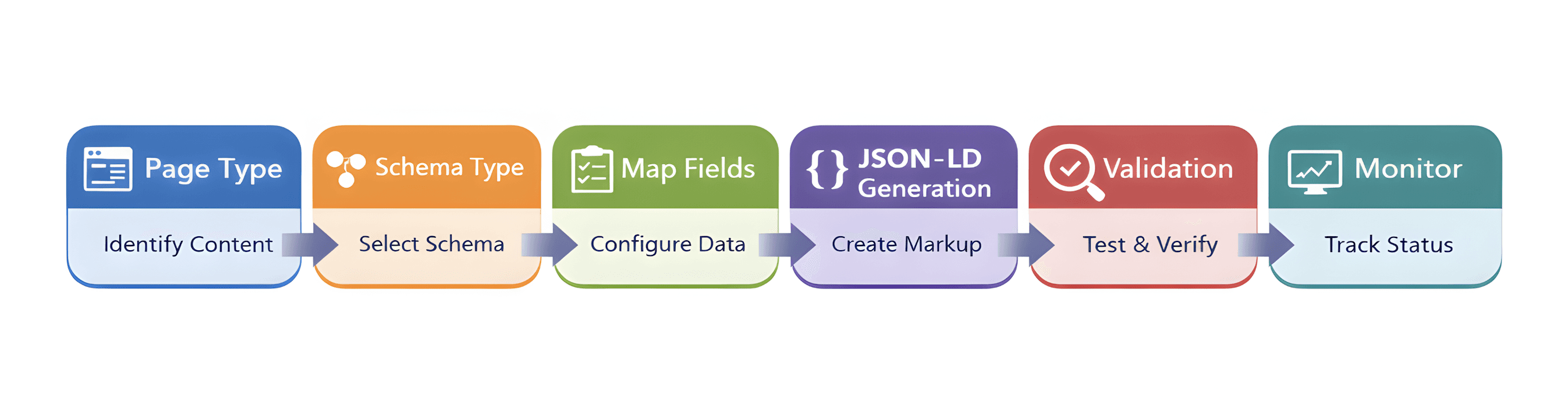

Schema Implementation Flow

Identify Page Type → Article, Product, Category, Homepage.

Select Appropriate Schema → Organization, Breadcrumb, BlogPosting, Product.

Map Content Fields → Titles, descriptions, images, authors, dates, prices, reviews.

Generate JSON-LD → Integrate via CMS template or manually for static pages.

Validate → Google Rich Results Test; fix errors/warnings.

Monitor → Continuous monitoring for new pages, updates, or schema errors in Search Console.
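The mapping, generation and embedding steps of this flow can be sketched as plain functions that templates call with CMS data. Property names follow schema.org's `BreadcrumbList`, `ListItem`, `Product` and `Offer` types; which properties a particular rich result requires should still be checked against Google's structured data documentation and the Rich Results Test. Function names and input shapes are hypothetical.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> dict:
    """trail: (name, absolute URL) pairs from the homepage down to the current page."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }


def product_jsonld(name: str, url: str, image: str, price: float,
                   currency: str, in_stock: bool) -> dict:
    """Minimal Product + Offer object mapped from hypothetical CMS fields."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "image": [image],
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": "https://schema.org/InStock" if in_stock
                            else "https://schema.org/OutOfStock",
        },
    }


def jsonld_script(data: dict) -> str:
    """Serialize to a <script> tag; escaping "<" stops a value like "</script>" closing the tag early."""
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'
```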

Industry reports suggest that pages with correctly implemented schema markup can see up to 30% higher CTR for rich-result-eligible queries than comparable pages without it. By including structured data requirements in the technical specification, websites can make full use of SERP features, improve click-through rates and help search engines understand their content accurately from the moment of launch.

Analytics, Tracking & SEO Monitoring

Good SEO decisions come from clean data. If tracking is patched in at the end - or implemented differently across templates - you lose visibility right when it matters most (launch and the first few weeks after). Putting analytics and monitoring requirements into the technical specification makes tracking consistent, helps teams spot issues early and creates a clear baseline for evaluating SEO performance.

Mandatory tools typically include Google Analytics 4 for traffic and engagement and Google Search Console for crawl and indexing insights, optionally supplemented by SEO audit tools like Ahrefs or SEMrush.

The TS should define the key events to track, such as broken links (404 pages), redirect chains, page load errors, canonical/hreflang issues and sitemap submission coverage. Monitoring these metrics ensures that technical SEO problems are identified and resolved before they impact organic traffic.
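Redirect chains in particular are cheap to catch before they ship if the redirect map is kept as data. This sketch assumes a hypothetical map of source path to target path (one 301 each). It follows every source to its final destination, reports sources that need more than one hop so they can point straight at the final URL, and raises on loops.

```python
def resolve_redirects(redirects: dict[str, str]) -> dict[str, tuple[str, int]]:
    """Map every source to (final destination, number of hops); raise on loops."""
    report = {}
    for source in redirects:
        path = [source]
        current = source
        while current in redirects:
            current = redirects[current]
            if current in path:
                raise ValueError("redirect loop: " + " -> ".join(path + [current]))
            path.append(current)
        report[source] = (current, len(path) - 1)
    return report


def flatten_chains(redirects: dict[str, str]) -> dict[str, str]:
    """Sources that take more than one hop, mapped straight to their final URL."""
    return {source: final
            for source, (final, hops) in resolve_redirects(redirects).items()
            if hops > 1}


print(flatten_chains({"/old/": "/older/", "/older/": "/new/", "/a/": "/b/"}))  # {'/old/': '/new/'}
```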

Best Practices:

Validate tracking codes and event triggers pre-launch, check crawl errors daily during the first 30 days, and perform ongoing audits post-launch.

Set up automated alerts for critical SEO events, such as spikes in errors or drops in indexed pages.

Assign responsibilities across teams: developers handle technical issues, content teams ensure meta/headings compliance, and marketing/SEO manages overall performance monitoring.
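An automated alert for drops in indexed pages can be as simple as comparing daily counts. This sketch assumes a hypothetical daily series of (date, indexed URLs, submitted URLs), for example copied from Search Console's Page indexing and sitemap reports; it also reuses the indexed pages ratio from the KPI table. The 10% drop and 80% ratio thresholds are placeholder values the team would set in the spec.

```python
def indexed_ratio(indexed: int, submitted: int) -> float:
    """Indexed pages ratio KPI: share of submitted URLs that are indexed."""
    return indexed / submitted if submitted else 0.0


def index_alerts(history: list[tuple[str, int, int]],
                 max_drop: float = 0.10, min_ratio: float = 0.80) -> list[str]:
    """history: (date, indexed URLs, submitted URLs), oldest first.
    Each day is compared with the previous day; thresholds are placeholders."""
    alerts = []
    for (_, prev_indexed, _), (day, indexed, submitted) in zip(history, history[1:]):
        if prev_indexed and (prev_indexed - indexed) / prev_indexed > max_drop:
            alerts.append(f"{day}: indexed pages dropped {prev_indexed} -> {indexed}")
        if indexed_ratio(indexed, submitted) < min_ratio:
            alerts.append(f"{day}: only {indexed}/{submitted} submitted URLs indexed")
    return alerts
```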

Some industry research suggests that websites that actively monitor GA4 and GSC after launch resolve technical SEO issues 20–25% faster and achieve higher organic traffic growth within the first three months.

Common SEO Mistakes in Technical Specifications (And How to Avoid Them)

Even experienced teams sometimes miss critical SEO requirements during website planning. Documenting these common mistakes in the technical specification can prevent costly post-launch issues.

By proactively addressing these issues, teams reduce rework, maintain consistent SEO quality and improve the site’s search visibility immediately after launch.

Bottom Line: SEO Technical Specification Checklist

To summarize, a comprehensive SEO technical specification ensures that every aspect of your website - from architecture to performance, content, and monitoring - is designed to maximize search visibility and user experience. Below is a one-page checklist you can include in the TS:

| Category | Key Requirements |
|---|---|
| Architecture & URL Structure | Logical hierarchy ≤ 3 levels, flat & scalable architecture, clean descriptive URLs, canonical rules, breadcrumb schema |
| Indexation & Crawlability | Robots.txt & meta robots rules, XML sitemaps, pagination & filter handling, no duplicate content |
| Performance & UX | Core Web Vitals thresholds, TTFB ≤ 0.8 s, mobile-first, CDN & caching, optimized images & media |
| On-Page SEO | Unique titles & meta descriptions, heading hierarchy (H1–H6), SSR vs CSR rules, internal linking & anchor guidelines |
| Structured Data | Mandatory schema types (Organization, Breadcrumbs, Article/Product), JSON-LD format, validation & monitoring |
| Tracking & Monitoring | GA4, GSC, technical event tracking, crawl & indexation reports, performance monitoring, automated alerts |

Building a website without embedding SEO requirements in the technical specification is like constructing a house without a blueprint - it may stand, but it won’t reach its full potential. A carefully crafted TS with SEO baked in ensures better search rankings, faster indexation, higher engagement and measurable business outcomes.

At Codeska, we help startups, SMBs and enterprise teams create technical specifications that bring SEO into the development process from day one. We connect business goals with practical technical requirements - so developers know what to build, marketers know what to expect and the finished website is ready to perform in organic search from the moment it goes live.

Let’s build your search-optimized website together - contact Codeska today and turn your technical specification into a growth engine.